How To Use the ChatGPT Stories Plugin To Write Creative Stories | Install and Activate the Plugin

ChatGPT started as a generative AI chatbot that draws on patterns learned from its training data to generate text, ideas, and other forms of creative writing based on the prompts users give it. It has come a long way since its first release: with the GPT-4 model (and, of course, the help of plugins), its creative abilities have gone a notch higher. One such plugin is Stories, which can write a complete story of the length you ask for, based on your prompt.

Below, we describe how to use the ChatGPT Stories plugin to write creative stories and which types of prompts produce different results.

How Do I Install and Activate the ChatGPT Stories Plugin?

To get started, make sure the Stories plugin is installed and activated; after that, you can move on to prompting. Here's how to install and activate the plugin:

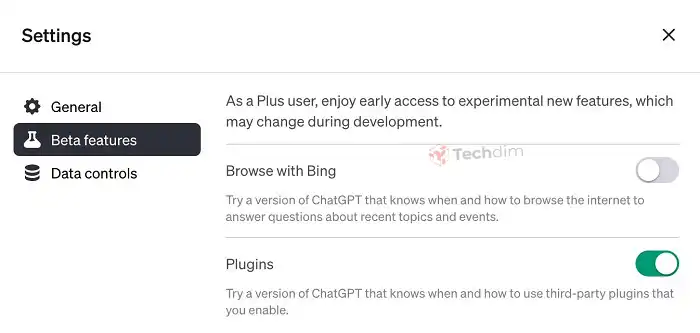

- Subscribe to ChatGPT Plus and log in to your ChatGPT account.

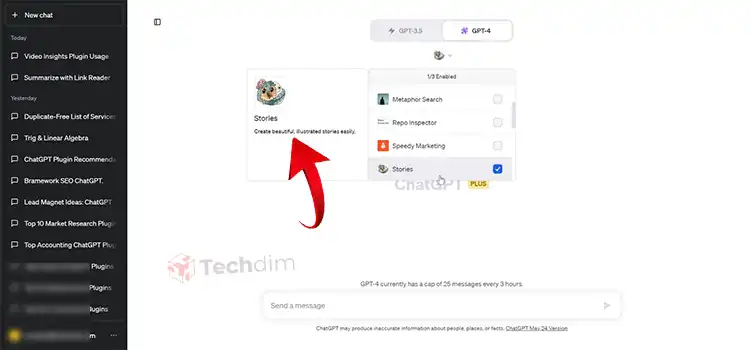

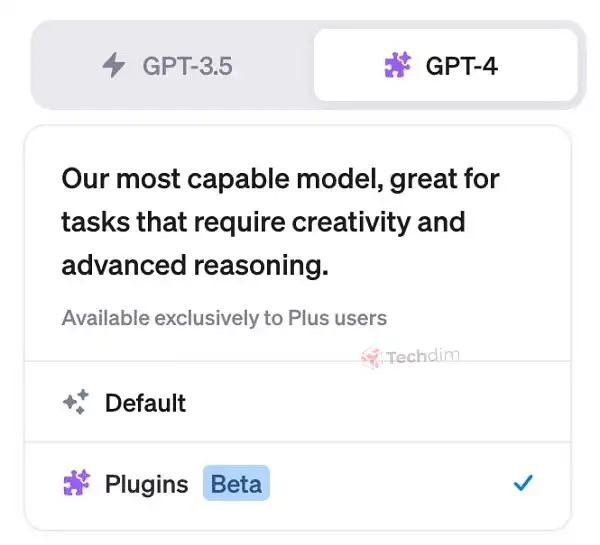

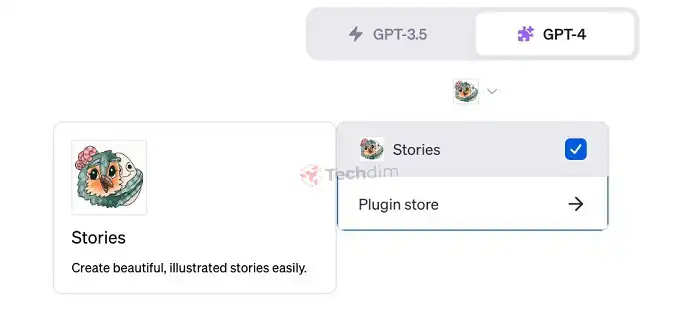

- On the ChatGPT home screen, select GPT-4 from the model dropdown menu and then click Plugins.

- From the plugins dropdown menu, open the Plugin Store.

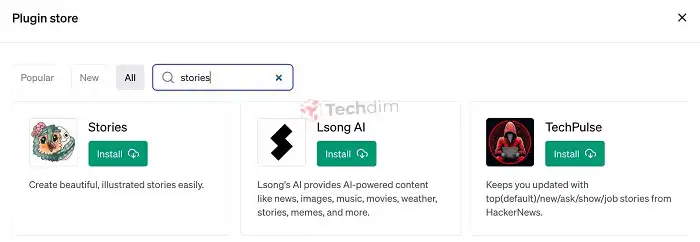

- Search for the Stories plugin.

- When you find it, click Install.

- Now go back to the ChatGPT home screen, open the same dropdown menu, and tick the Stories checkbox to enable it.

Read on to learn how to prompt it for the best results.

Prompt ChatGPT Stories to Write Creative Stories

To write a simple story about a character or an event, you can use prompts like these:

“Write a story about a young boy who got lost in the woods and was found by a wolf who is actually friendly”

“Create a fantasy story about a world where cows are the leaders”

You can also ask it to write a unique story for a special occasion, like this:

“Write a story for my daughter’s birthday about a princess who got lost and was later found by her parents”

The Stories plugin can also create educational stories with prompts like these:

“Write an educational story about why it’s important to plant trees”

“Write a story that teaches kids about the solar system in a fun way”

Using Detailed Prompts

A great thing about the Stories plugin is that it can handle detailed prompts and craft long stories. To take advantage of this, include a few more elements in your prompt, such as:

- The main character
- The antagonist
- The setting
- The time period
- The genre
- The main theme
- The overall mood of the story
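If you write many stories with the same structure, it can help to treat these elements as a fill-in-the-blanks template. The sketch below is a minimal, hypothetical Python helper: the `StoryBrief` class, its field names, and the default length are illustrative assumptions, not part of the Stories plugin. It simply assembles the elements listed above into one detailed prompt that you can paste into ChatGPT.

```python
from dataclasses import dataclass


@dataclass
class StoryBrief:
    """Hypothetical container for the story elements of a detailed prompt."""
    main_character: str
    antagonist: str
    setting: str
    time_period: str
    genre: str
    theme: str
    mood: str
    length: str = "about 1,500 words"  # assumed default; ask for any length you like

    def to_prompt(self) -> str:
        # Combine every element into a single prompt string for ChatGPT.
        return (
            f"Write a story in the {self.genre} genre, {self.length} long. "
            f"The main character is {self.main_character}, "
            f"and the antagonist is {self.antagonist}. "
            f"Set it in {self.setting} during {self.time_period}. "
            f"The main theme is {self.theme}, "
            f"and the overall mood should be {self.mood}."
        )


brief = StoryBrief(
    main_character="Mira, a shy apprentice clockmaker",
    antagonist="a rival inventor who wants to steal her designs",
    setting="a floating city",
    time_period="an alternate 1890s",
    genre="steampunk adventure",
    theme="courage and trust",
    mood="hopeful but tense",
)
print(brief.to_prompt())
```

Changing one field, such as the mood or the time period, while keeping the others fixed is an easy way to see how each element shapes the story the plugin writes.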

Conclusion

Writing stories with ChatGPT is exciting: you can start from a prompt template, outline your story, break it into chapters, have each chapter written in detail, and ask for guidance on how to improve it. That leaves plenty of room for creativity, but the quality of the result depends heavily on how well you craft your prompt. Follow the steps in this guide, put some thought into your prompts, and the Stories plugin can help you bring your creative vision to life.
